I’m gonna go off on what seems like a tangent. my perception of the situation probably errs on the side of thinking it worse than it is. there are however clear limits to the math and the practice.

you stated Z difference of “2 cm”, but how much baseline/IPD?

yes, that is for illustration purposes, “not to scale” I’d say.

mathematically, those points are always “in view” because the field of view has no reason not to approach 180 degrees.

in the case of the general multi-view situation (SFM etc), it’s fine to have those points be practically in view, and even has advantages. optical axes crossing at right angles would give the math the best possible condition.

for block matching, which is a stereo situation, it’s actually misleading, a bad situation to have. by that I primarily mean Z differences (“side-eyed”) but also severely “cross-eyed” setups. these only differ in which eye goes “cross” in what direction. the more “cross”, the worse.

the epipole in view… that means you could see one of your eyes with your other eye (remove nose to demonstrate). that's how severely cross-eyed it is. imagine trying to rectify such a view. imagine the homography. you have that vanishing point in view, and now you’re supposed to put it off to the side (“maps the epipole to a point at infinity”) and produce a top-down view (parallel epilines).
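to put rough numbers on "epipole in view", here is a minimal sketch. it assumes two pinhole cameras with parallel optical axes (no relative rotation) and identical intrinsics. the function names and the 640x480 / f = 800 px camera are made up for illustration, they are not from your setup.

```python
def epipole_px(offset, f, cx, cy):
    """Pixel position of the epipole for two cameras with parallel optical axes.

    offset = (dx, dy, dz): center of the other camera in this camera's frame
    (x right, y down, z forward), any length unit.
    Returns None when dz == 0: the epipole is at infinity, the ideal stereo case.
    The formula holds for either sign of dz, because the epipole is where the
    baseline (a line, not a ray) pierces the image plane.
    """
    dx, dy, dz = offset
    if dz == 0:
        return None
    return (cx + f * dx / dz, cy + f * dy / dz)


def epipole_in_view(offset, f, cx, cy, width, height):
    """True when the epipole falls inside the image bounds."""
    e = epipole_px(offset, f, cx, cy)
    return e is not None and 0 <= e[0] < width and 0 <= e[1] < height


# hypothetical camera: 640x480, focal length 800 px, principal point centered
f, cx, cy, w, h = 800.0, 320.0, 240.0, 640, 480

# Z difference fixed at 2 cm, baseline varied (all values in metres)
for baseline in (0.06, 0.005, 0.0):
    off = (baseline, 0.0, 0.02)
    print(baseline, epipole_px(off, f, cx, cy), epipole_in_view(off, f, cx, cy, w, h))
```

under these assumptions a 6 cm baseline with 2 cm of Z difference puts the epipole far outside the frame, while a few millimetres of baseline with the same Z difference puts it inside. that is why the baseline matters as much as the Z difference.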

imagine a front-facing camera in a car, on a straight road. the vanishing point of the road is in view. now try homographing that to a top-down view.

the situations are equivalent.
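a small sketch of why that top-down warp blows up near the vanishing point. it assumes a pinhole camera with a level optical axis at some height above a flat road, so the horizon sits at the principal point row. the function names and the numbers (f = 800 px, cy = 240, camera 1.5 m above the road) are illustrative assumptions only.

```python
def ground_distance(v, f, cy, cam_height):
    """Distance along the road (same unit as cam_height) seen at image row v.

    Assumes a level pinhole camera, so the horizon / vanishing point is at
    row cy. Only rows below the horizon (v > cy) hit the road.
    """
    if v <= cy:
        raise ValueError("row is at or above the horizon; no ground point")
    return f * cam_height / (v - cy)


def road_length_per_row(v, f, cy, cam_height):
    """Road length covered by the single pixel row between v and v + 1."""
    return ground_distance(v, f, cy, cam_height) - ground_distance(v + 1, f, cy, cam_height)


# hypothetical camera: focal length 800 px, horizon at row 240, 1.5 m above the road
f, cy, cam_height = 800.0, 240.0, 1.5

# from the bottom of the image up to one row below the horizon
for v in (478, 340, 250, 241):
    print(v, ground_distance(v, f, cy, cam_height), road_length_per_row(v, f, cy, cam_height))
```

one pixel row just below the horizon has to be stretched over hundreds of metres of top-down view, while a row near the bottom covers centimetres. rectifying with the epipole in view asks for the same kind of stretch around the epipole.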

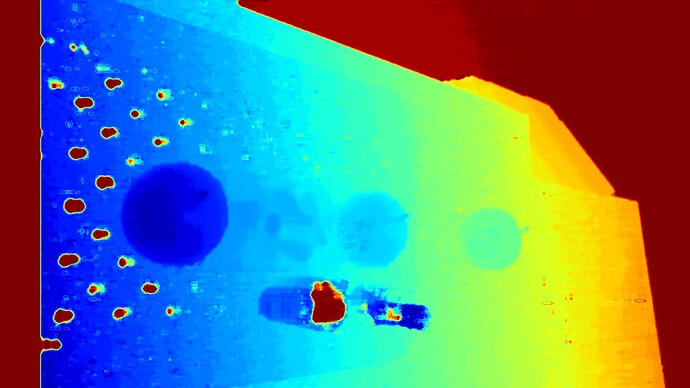

stealing pics from elsewhere:

so YES, if the goal is block matching for stereo vision, then avoid any such situations.

supposing the starting situation is not as severe, then you can work with that. the newly calculated views (camera matrices) still have some properties to be aware of (principal point relative to image bounds, …). it’s not like you still look at the target straight on. if those were your eyes, your fovea/foveae would be out of work very quickly. you’d look at the target peripherally.

slight Z differences, as in Fig. 9.3 in Steve’s post, can be corrected, but that is still a materially (if slightly) worse starting situation than no Z difference. it’s a correction of a suboptimal situation, not simply a normalization/transform of a fine situation.

I’m thumbing through the book for any discussion of block matching for stereo vision. there is a little bit written on the geometry of it in section 11.12. not enough to help you practically or convey any intuition. that is left as an exercise for the reader.

yet the example images in the book don’t show severe examples. they show some in-plane rotation, a bit of cross-eyeing, but nothing where the epipole comes near being in view.

that’s what I take issue with. it’s a math book only, not helping with practical aspects. the book makes those claims without actually demonstrating them. do not just buy into that. printed word does not win arguments by virtue of having been printed. the arguments have to convince. I believe I demonstrated what is even in the realm of entertainable positions with respect to the situation.

part from the book:

> Since the application of arbitrary 2D projective transformations may distort the image substantially, the method for finding the pair of transformations subjects the images to a minimal distortion.

that is waffling. non sequitur. prime example of my disdain for “academic” writing. the antithesis of technical writing. that phrase alone should shake anyone reading the paragraph into critical reception. the transform, i.e. the perspective part of it, is determined (in non-degenerate cases). there is no “minimal distortion” solution to choose from any solution space. any degrees of freedom left are inconsequential to quality: zoom/scale/stretch, translation and rotation. at most, one should caution to pick those degrees of freedom pragmatically (i.e. not scaling down to thumbnail size or squashing comically).