Hello, I am trying to do Hand eye calibration with the new OpenCV functions provided in the 4.1 version.

I currently have a camera/lidar (Intel realsense L515) mounted to the end effector/flange of an UR5-e 6DOF robot manipulator.

Below are my current setup, where you can see my camera mounted on the Robot manipulator.

I have troubles in obtaining correct results.

My Results so far: (units in mm and deg)

R_cam2gripper_rT =

[-9.04785, 12.109, -52.0667]

R_cam2gripper_HORAUD =

[0.03450308721334205, 0.902145724021868, 0.4300495664476459;

-0.9846981745094945, 0.1042351288812738, -0.1396586661284344;

-0.1708187404543711, -0.4186503678920681, 0.891937681328364]

R_cam2gripper_rH =

[-25.1441, 9.83543, -87.9933]

R_cam2gripper_PARK =

[0.03805064311332489, 0.9018297586234388, 0.4304128657694273;

-0.9862517484060972, 0.1032072572000317, -0.12905716108439;

-0.16080931976903, -0.4195847334239353, 0.8933582787174394]

R_cam2gripper_rP =

[-25.1581, 9.25388, -87.7906]

R_cam2gripper_ANDREFF =

[0.9397345685222583, -0.1627515954024283, 0.3006839851376348;

-0.2928750515844619, 0.07059643955182285, 0.953540951864158;

-0.1764175299784119, -0.9841382326261742, 0.01867603276250016]

R_cam2gripper_rA =

[-88.9129, 10.1612, -17.31]

R_cam2gripper_DAN =

[-nan, -nan, -nan;

-nan, -nan, -nan;

-nan, -nan, -nan]

R_cam2gripper_rD =

[-nan, -nan, -nan]

t_cam2gripper_TSAI =

[10.98487487474116;

-33.02969553096816;

223.2632618335024]

t_cam2gripper_HORAUD =

[-10.71180214365059;

-46.938158399302;

183.2681549948555]

t_cam2gripper_PARK =

[-10.20332151779871;

-47.77303083896501;

183.5396204863184]

t_cam2gripper_ANDREFF =

[-31.08091355367419;

10.24423282567718;

378.0316687426363]

t_cam2gripper_DAN =

[-nan;

-nan;

nan]

I have roughly estimated the results to a pose vector of:

[0, 0, 60, 0, 0, 135] [X, Y, Z, RX, RY, RZ]

From Robot API:

Actual Cartesian coordinates of the tool: (x,y,z,rx,ry,rz), where rx, ry and rz is a rotation vector representation of the tool orientation

Here are the link to my images, Robot poses and my code so far.

Images, Robot Poses [X,Y,Z,RX,RY,RZ] .

Output of my function are: ( Sorry for the long output)

T =

[0.032447327, 0.99877787, 0.037282467, 33.506855;

-0.99907005, 0.033471372, -0.027179375, 12.694229;

-0.028394053, -0.0363659, 0.9989351, 554.77515;

0, 0, 0, 1]

T =

[0.033374161, 0.8556391, 0.51649582, -309.20471;

-0.9980914, 0.055399925, -0.027283626, 45.892395;

-0.051958766, -0.51459944, 0.85585493, 613.30365;

0, 0, 0, 1]

T =

[-0.0095516145, 0.8623035, 0.5063017, -123.69634;

-0.99777102, -0.041659582, 0.052128758, 68.836143;

0.066043131, -0.50467527, 0.8607794, 416.43491;

0, 0, 0, 1]

T =

[-0.20632681, 0.81512898, 0.54128921, 42.913048;

-0.92579246, 0.01645742, -0.37767375, 52.596439;

-0.31676105, -0.57904571, 0.75124466, 594.59149;

0, 0, 0, 1]

T =

[0.012495521, 0.99147469, 0.12969905, -95.775612;

-0.90882617, 0.065352567, -0.41202432, 11.767408;

-0.41698784, -0.11272543, 0.90189475, 467.67084;

0, 0, 0, 1]

T =

[-0.0030205173, 0.96989256, 0.24351457, -124.27053;

-0.89622313, 0.10539681, -0.43090087, 61.395798;

-0.4435932, -0.21954495, 0.86892182, 541.08423;

0, 0, 0, 1]

T =

[-0.79455251, 0.60688567, -0.019394122, -44.286865;

-0.51012051, -0.68450755, -0.52079409, 117.15798;

-0.32933789, -0.40390489, 0.85346198, 470.72272;

0, 0, 0, 1]

T =

[0.46751362, 0.84215909, -0.26869875, -167.1772;

-0.88398468, 0.44588464, -0.14056288, 10.802905;

0.0014323442, 0.30324066, 0.95291293, 396.17206;

0, 0, 0, 1]

T =

[0.61822659, 0.77588987, 0.1256613, -7.5792241;

-0.72428077, 0.62445444, -0.29235947, -53.953945;

-0.30530852, 0.089730345, 0.94801646, 626.52856;

0, 0, 0, 1]

T =

[0.18281206, 0.91443706, 0.36108813, 57.240898;

-0.95419985, 0.25349757, -0.15887626, -63.850857;

-0.2368173, -0.31550571, 0.91889811, 697.77399;

0, 0, 0, 1]

T =

[-0.011332119, 0.99938422, 0.033207085, 51.272999;

-0.99982101, -0.010821423, -0.015518709, 143.21815;

-0.015149806, -0.033376999, 0.99932802, 544.06097;

0, 0, 0, 1]

T =

[-0.31214634, 0.89216, -0.32651976, -243.96638;

-0.94377542, -0.33058572, -0.0010391828, 135.71184;

-0.10886988, 0.30783695, 0.94518983, 582.81262;

0, 0, 0, 1]

T =

[-0.025150575, 0.99948394, 0.019981407, -269.61829;

-0.99965096, -0.024983024, -0.0085911434, 145.56754;

-0.008087514, -0.020190503, 0.99976343, 550.43463;

0, 0, 0, 1]

T =

[-0.031009046, 0.9989211, 0.034570154, -102.91589;

-0.99929464, -0.03171647, 0.020106304, 73.610458;

0.021181056, -0.033922292, 0.99919999, 408.36877;

0, 0, 0, 1]

T =

[-0.029887864, 0.99886602, 0.03705902, -185.14394;

-0.99658823, -0.032632314, 0.07580924, 51.447205;

0.076932594, -0.034666806, 0.99643344, 329.34799;

0, 0, 0, 1]

T =

[-0.019084895, 0.85671061, 0.51544416, -73.9217;

-0.99871296, -0.040565651, 0.030444991, 72.056427;

0.046991874, -0.51419973, 0.85638219, 421.37299;

0, 0, 0, 1]

T =

[-0.29408774, 0.78182018, 0.54979056, 38.124172;

-0.94976497, -0.30347314, -0.076488808, 115.83392;

0.10704616, -0.54466623, 0.83179313, 571.72388;

0, 0, 0, 1]

T =

[0.42643368, 0.78748894, 0.44498935, -72.440544;

-0.90450132, 0.36819404, 0.21519867, -27.132071;

0.0056241401, -0.49426141, 0.86929512, 538.45984;

0, 0, 0, 1]

cameraMatrix :

[1370.818139621905, 0, 961.890790739307;

0, 1370.095440715639, 560.0205755273522;

0, 0, 1]

distCoeffs : 0.147587 -0.384591 0.00503988 -0.000217179 0.189602

Rotation vector :

[0.002170664666822091, 0.06932362248330737, -1.539190640739083;

-0.3808597290643198, 0.4682954841038636, -1.474972237488654;

-0.4585655895978568, 0.3816788882104497, -1.551907978444677;

-0.1775048219446878, 0.8012827726040731, -1.594501959989459;

0.2430549492342756, 0.4444588417883144, -1.50039717448852;

0.1750173836859757, 0.5576463409644808, -1.476076704479983;

0.2546885977161631, 0.6870855643740165, -2.412978021775229;

0.2875852892379269, -0.1625563682265382, -1.076436236284204;

0.231928871475526, 0.2582551986403374, -0.8712181046923483;

-0.1127565722196006, 0.4301441543443595, -1.318942687685679;

-0.005137373812516877, 0.0508140280749047, -1.583598662365615;

0.3235575795627225, -0.2085396984492167, -1.888271694371879;

-0.0006892155765055306, 0.03736235864201979, -1.593555910536962;

-0.03889057347225315, 0.01745553732538511, -1.601684667119183;

-0.07573291051041284, -0.02091686541699921, -1.59909976439389;

-0.4518561064950632, 0.4079160849869857, -1.555512592244226;

-0.4924010172243078, 0.4864661386170854, -1.836463993750586;

-0.4541223999911563, 0.3002489869496504, -1.102064873001999]

Translation vector :

[35.86166194331239, 4.766750261391213, 557.0446135682984;

-306.5830050217075, 36.48475246344285, 615.2849439317491;

-122.0420296666905, 62.81856643684601, 418.6982975094964;

45.31403122380313, 44.13379923317115, 596.3854834252789;

-93.88034260697218, 5.036532332336537, 469.5894168428511;

-122.0825593757022, 53.62643338353875, 543.7450038677171;

-42.46075583725683, 110.501244681195, 473.5395887381977;

-165.3201671608492, 5.005215033674919, 396.9213790288188;

-5.058684221403818, -62.88804002900386, 627.8024296962734;

59.91290351375584, -73.74024410089586, 698.1717025422383;

53.56354529336077, 135.4106711107449, 546.4882854206396;

-241.4921224606571, 126.9769514933783, 585.4866173034209;

-267.3333604555122, 137.20445665623, 553.4421892542297;

-101.2464884162305, 67.70981879200173, 410.1426752900625;

-183.8934329828842, 46.37625320499004, 330.9137620012634;

-72.1348487838419, 66.0611406024772, 423.5543216221984;

40.52514100614005, 107.641239375997, 574.2645546811119;

-70.14045746789145, -34.79261018727873, 539.9966685977743]

Size = 18 standard deviation intrinsics =

2.85221 2.80861 2.18894 1.92603 0.0061204 0.0399352 0.000530538 0.000557114 0.0803222 0 0 0 0 0 0 0 0 0

Size = 108 standard deviation extrinsics =

0.0032494 0.00356233 0.000445387 0.904113 0.782061 1.24712 0.00211988 0.00210286 0.000810539 1.03445 0.918205 1.41454 0.00175247 0.00179261 0.000576841 0.661965 0.592247 0.850572 0.00196486 0.00206846 0.000581539 0.951807 0.834042 1.14703 0.00193962 0.00208886 0.000412434 0.738158 0.66123 0.990165 0.00181792 0.00200799 0.000547039 0.859894 0.767068 1.11678 0.00214 0.00212912 0.000659397 0.752602 0.662655 0.905763 0.00211443 0.00247152 0.00041957 0.649801 0.573345 0.935687 0.0029152 0.00325071 0.000436454 1.01112 0.886116 1.4642 0.00451103 0.00492972 0.000828549 1.13182 0.982622 1.5623 0.00338521 0.00303265 0.000482351 0.903757 0.771 1.23632 0.00554276 0.00474313 0.00111917 0.946608 0.855244 1.47541 0.00283955 0.00329577 0.000493451 0.904439 0.822891 1.39312 0.00248716 0.00246055 0.00024157 0.65578 0.580782 0.901768 0.00236557 0.00224477 0.000301161 0.528141 0.495061 0.845201 0.00177053 0.00183636 0.000527911 0.668973 0.590684 0.846739 0.00239404 0.00239742 0.00067075 0.925069 0.804102 1.17204 0.00187739 0.00198966 0.000583066 0.859002 0.75259 1.10031

Size = 18

RMS re-projection error estimated for each pattern view =

0.255986 0.5133 0.553166 0.359319 0.27982 0.376429 0.49861 0.28564 0.238257 0.26081 0.368832 0.233498 0.355354 0.268055 0.581164 0.378732 0.346973 0.351392

Avg_RMS = 0.361408

theta 1

[-1.25176;

2.81198;

-0.052189302]

theta 2

[-1.19806;

2.8584599;

-0.039006598]

theta 3

[-1.17857;

2.8594401;

-0.0073111798]

theta 4

[-1.1819299;

2.90064;

-0.012464]

theta 5

[1.17334;

-2.87885;

-0.016700801]

theta 6

[1.0776;

-2.6498301;

0.69110799]

theta 7

[0.66794699;

-2.7711401;

0.77122003]

theta 8

[1.63858;

-2.2959199;

0.590119]

theta 9

[1.21279;

-2.6637499;

0.751881]

theta 10

[1.08145;

-2.6414101;

0.66040099]

theta 11

[-0.96832401;

2.63784;

-1.02718]

theta 12

[-1.13098;

2.48735;

-0.37626001]

theta 13

[-1.14547;

2.47054;

-0.54200602]

theta 14

[0.15192699;

2.66116;

-0.54946798]

theta 15

[-1.7460999;

2.3099999;

0.39245901]

theta 16

[-1.92256;

2.05778;

-0.165609]

theta 17

[-1.49303;

2.6093299;

-0.58735102]

theta 18

[-0.66910303;

2.81091;

0.42322999]

robot_rot_01

[-0.66765684, -0.7410363, 0.071411327;

-0.74317575, 0.66907537, -0.0052826796;

-0.043864902, -0.056598183, -0.99743295]

robot_rot_02

[-0.70045865, -0.71204883, 0.048416737;

-0.71310478, 0.70102268, -0.0069829007;

-0.028969062, -0.039417442, -0.99880284]

robot_rot_03

[-0.70855808, -0.70409328, 0.046883691;

-0.70432383, 0.70973653, 0.014213318;

-0.043282568, -0.022950338, -0.9987992]

robot_rot_04

[-0.71518344, -0.69883925, 0.01167905;

-0.69891381, 0.71519566, -0.0038347535;

-0.0056729289, -0.010905202, -0.99992442]

robot_rot_05

[-0.71464407, -0.69864219, -0.034393743;

-0.69899422, 0.71512324, -0.0024189802;

0.026285768, 0.022312317, -0.99940544]

robot_rot_06

[-0.71479088, -0.69929838, -0.0074709128;

-0.60657215, 0.62525636, -0.49104449;

0.34805787, -0.34646249, -0.87110245]

robot_rot_07

[-0.88084751, -0.46972913, -0.058840185;

-0.37179908, 0.76337469, -0.52822769;

0.29304105, -0.44341135, -0.84706157]

robot_rot_08

[-0.33065325, -0.94344038, 0.024263402;

-0.83820999, 0.28176433, -0.4669185;

0.43367317, -0.17472595, -0.8839674]

robot_rot_09

[-0.67185366, -0.73473001, 0.093725428;

-0.67529935, 0.55563867, -0.48501182;

0.30427527, -0.38914967, -0.8694706]

robot_rot_10

[-0.70813632, -0.70562863, -0.025122082;

-0.61077911, 0.63002473, -0.47960162;

0.35424817, -0.32427931, -0.87712663]

robot_rot_11

[-0.78046811, -0.51630265, 0.35256356;

-0.61876571, 0.55723256, -0.55373359;

0.089434244, -0.65032566, -0.7543726]

robot_rot_12

[-0.60333025, -0.66168356, 0.44516009;

-0.76374447, 0.64006674, -0.08371941;

-0.2295364, -0.39049903, -0.89152879]

robot_rot_13

[-0.60494578, -0.64028507, 0.4733662;

-0.77964395, 0.59712344, -0.18867657;

-0.16185127, -0.4831962, -0.86042178]

robot_rot_14

[-0.90710497, 0.18676098, 0.37720144;

0.022090336, 0.91605777, -0.40043747;

-0.42032441, -0.35490632, -0.83508617]

robot_rot_15

[-0.27047762, -0.96263158, 0.013499573;

-0.9041605, 0.25881472, 0.33986583;

-0.33065948, 0.079720326, -0.94037706]

robot_rot_16

[-0.043794166, -0.95043004, 0.30783886;

-0.98742807, 0.088023737, 0.13129213;

-0.15188111, -0.29821891, -0.94233626]

robot_rot_17

[-0.52249575, -0.8141129, 0.25341344;

-0.84417498, 0.45215064, -0.28797284;

0.11986136, -0.36438987, -0.92350054]

robot_rot_18

[-0.87189603, -0.46751767, 0.14568631;

-0.40389141, 0.85478991, 0.32589245;

-0.27689168, 0.22530289, -0.93411434]

Translation 1

[711.39697;

-130.51599;

531.64398]

Translation 2

[610.10999;

-144.93901;

517.66003]

Translation 3

[614.638;

124.095;

517.73102]

Translation 4

[692.29401;

-16.318501;

400.927]

Translation 5

[728.55096;

51.480301;

331.66501]

Translation 6

[700.69897;

155.37801;

323.10599]

Translation 7

[691.328;

183.174;

464.776]

Translation 8

[734.32001;

203.985;

414.177]

Translation 9

[659.77197;

410.70898;

357.76401]

Translation 10

[711.00494;

186.129;

301.49902]

Translation 11

[509.62598;

181.15001;

424.94901]

Translation 12

[539.33301;

15.105901;

394.89001]

Translation 13

[460.16202;

95.829201;

399.26401]

Translation 14

[561.37701;

153.61;

338.465]

Translation 15

[667.979;

-120.53799;

411.591]

Translation 16

[588.79401;

-136.30099;

574.21399]

Translation 17

[652.28302;

64.361801;

628.51099]

Translation 18

[637.00403;

-69.146698;

590.25195]

18 R_gripper2base

18 t_gripper2base

18 R_target2cam

18 t_target2cam

R_cam2gripper_TSAI =

[0.6010658302958732, 0.7586336330819049, 0.2513859152929658;

-0.7711780564918385, 0.6331136023054994, -0.06672010012900674;

-0.2097719543604516, -0.1537601292035861, 0.9655845637908121]

R_cam2gripper_rT =

[-9.04785, 12.109, -52.0667]

R_cam2gripper_HORAUD =

[0.03450308721334205, 0.902145724021868, 0.4300495664476459;

-0.9846981745094945, 0.1042351288812738, -0.1396586661284344;

-0.1708187404543711, -0.4186503678920681, 0.891937681328364]

R_cam2gripper_rH =

[-25.1441, 9.83543, -87.9933]

R_cam2gripper_PARK =

[0.03805064311332489, 0.9018297586234388, 0.4304128657694273;

-0.9862517484060972, 0.1032072572000317, -0.12905716108439;

-0.16080931976903, -0.4195847334239353, 0.8933582787174394]

R_cam2gripper_rP =

[-25.1581, 9.25388, -87.7906]

R_cam2gripper_ANDREFF =

[0.9397345685222583, -0.1627515954024283, 0.3006839851376348;

-0.2928750515844619, 0.07059643955182285, 0.953540951864158;

-0.1764175299784119, -0.9841382326261742, 0.01867603276250016]

R_cam2gripper_rA =

[-88.9129, 10.1612, -17.31]

R_cam2gripper_DAN =

[-nan, -nan, -nan;

-nan, -nan, -nan;

-nan, -nan, -nan]

R_cam2gripper_rD =

[-nan, -nan, -nan]

t_cam2gripper_TSAI =

[10.98487487474116;

-33.02969553096816;

223.2632618335024]

t_cam2gripper_HORAUD =

[-10.71180214365059;

-46.938158399302;

183.2681549948555]

t_cam2gripper_PARK =

[-10.20332151779871;

-47.77303083896501;

183.5396204863184]

t_cam2gripper_ANDREFF =

[-31.08091355367419;

10.24423282567718;

378.0316687426363]

t_cam2gripper_DAN =

[-nan;

-nan;

nan]

I don’t know what i’m doing wrong. I have tryed to analyze the images if there is a bad reprojection error (can be seen in my output).

I have also tryed to capture images and poses from different angles of the chessboard. In the end i have tryed to use other software which provided a different result. [0.006333618168 0.00842033441 0.008065033756, 2.6688916 0.4612792874 135.8936593] where the translation are in [m] and rotation are in [deg].

Sorry for the long post about my problem. Do anybody have an understanding why i’m getting bad results and the daniilidis aren’t outputting any results. I believe i’m having troubles in understanding the rotation conversion correctly, i’m currently using rodriguez from rotation vector to rotation matrix. Any help will be great!

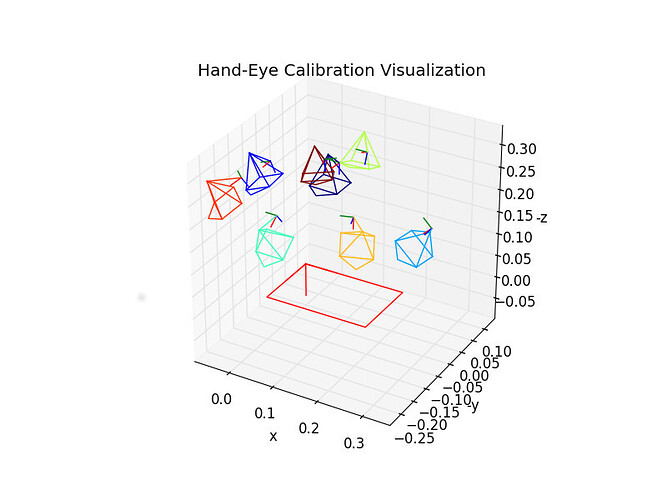

Some of the pictures are ‘Pointing into each other’ which makes no sense. I’m not completely sure on how to roughly estimate the transformation between the two poses?

Some of the pictures are ‘Pointing into each other’ which makes no sense. I’m not completely sure on how to roughly estimate the transformation between the two poses?