I am trying to read the year on a quarter. As a first step, I’m trying to take an image of the coin and “straighten” it.
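“Straightening” here amounts to undoing an in-plane rotation about the coin's centre. The sketch below assumes the centre `(cx, cy)` and the tilt angle are already known, for example from an alignment step. The helper names `rotation_about_center` and `apply_affine` are hypothetical. The matrix follows the formula OpenCV documents for `cv2.getRotationMatrix2D`, so with OpenCV you would normally just call that function.

```python
import numpy as np

def rotation_about_center(cx, cy, angle_deg, scale=1.0):
    """Hypothetical helper: 2x3 affine matrix that rotates by angle_deg
    (positive = counter-clockwise on screen) about (cx, cy), using the
    formula documented for cv2.getRotationMatrix2D."""
    theta = np.deg2rad(angle_deg)
    alpha = scale * np.cos(theta)
    beta = scale * np.sin(theta)
    return np.array([
        [alpha, beta, (1 - alpha) * cx - beta * cy],
        [-beta, alpha, beta * cx + (1 - alpha) * cy],
    ])

def apply_affine(M, points):
    """Map an (N, 2) array of (x, y) pixel coordinates through a 2x3 matrix."""
    pts = np.asarray(points, dtype=float)
    return pts @ M[:, :2].T + M[:, 2]

# The centre stays fixed; a point just right of it moves up (y points down)
M = rotation_about_center(100, 80, 90)
print(apply_affine(M, [[100, 80], [101, 80]]))
```

With OpenCV available, `cv2.warpAffine(image, M, (width, height))` applies the same matrix to a whole image.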

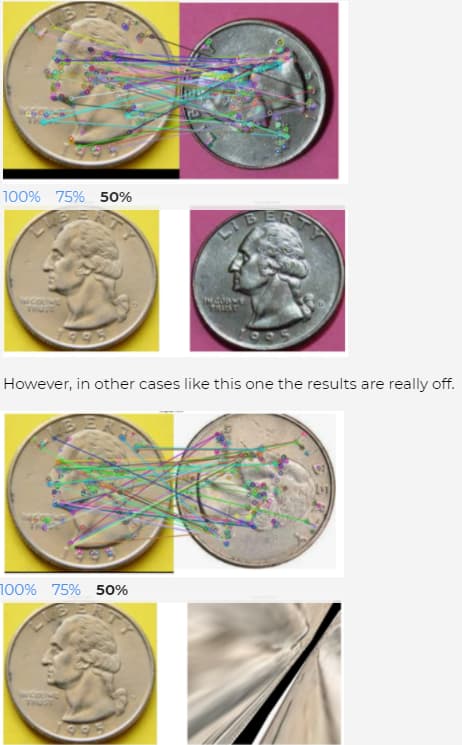

I found this video on how to detect image features and use them to align an image. It works in some cases, like this one, but not in all of them.
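Feature-based alignment ends with pairs of matched points, which are `points1` and `points2` in the code. A coin photographed roughly face-on should differ between shots mainly by rotation, uniform scale and shift. A 4-degree-of-freedom similarity transform is therefore a tighter model than the 8-degree-of-freedom homography that `cv2.findHomography` fits. It also reports the rotation angle directly. OpenCV's `cv2.estimateAffinePartial2D` fits this kind of model with RANSAC.

The NumPy sketch below shows what is being solved. The helper `fit_similarity` is hypothetical. It uses plain least squares (Umeyama's method) with no outlier rejection, so it assumes the inputs are already good matches.

```python
import numpy as np

def fit_similarity(src, dst):
    """Hypothetical helper: least-squares rotation + uniform scale + translation
    mapping src points onto dst points (Umeyama's method in 2D).
    Returns (scale, angle_deg, M) with dst ~= M @ [x, y, 1]."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    s_c, d_c = src - mu_s, dst - mu_d
    # Cross-covariance between the centred point sets
    cov = d_c.T @ s_c / len(src)
    U, S, Vt = np.linalg.svd(cov)
    # Force a proper rotation (no mirror image)
    D = np.eye(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[1, 1] = -1
    R = U @ D @ Vt
    var_s = (s_c ** 2).sum() / len(src)
    scale = np.trace(np.diag(S) @ D) / var_s
    t = mu_d - scale * R @ mu_s
    M = np.hstack([scale * R, t[:, None]])
    # Angle measured from +x toward +y (clockwise on screen, since image y points down)
    angle_deg = np.degrees(np.arctan2(R[1, 0], R[0, 0]))
    return scale, angle_deg, M
```

Calling `fit_similarity(points2, points1)` would give a matrix mapping the second image onto the reference. That matrix could then go to `cv2.warpAffine(im2, M, (width, height))` in place of `cv2.warpPerspective`.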

Any ideas on what I can do here to properly align the images?

My Jupyter code and images can be found at the link below.

https://drive.google.com/drive/folders/1lcTuaKrxEGU1y03tgjDwhQobsjt-F8wO?usp=sharing

Added code:

```python
from __future__ import print_function
import cv2
import numpy as np
import matplotlib.pyplot as plt

# Read reference image
refFilename = "Quarter_A.png"
print("Reading reference image : ", refFilename)
im1 = cv2.imread(refFilename, cv2.IMREAD_COLOR)
im1 = cv2.cvtColor(im1, cv2.COLOR_BGR2RGB)

# Read image to be aligned
imFilename = "Quarter_NA4.png"
#imFilename = "Quarter_NA.png"
print("Reading image to align : ", imFilename)
im2 = cv2.imread(imFilename, cv2.IMREAD_COLOR)
im2 = cv2.cvtColor(im2, cv2.COLOR_BGR2RGB)

plt.figure(figsize=[20, 10])
plt.subplot(121); plt.axis('off'); plt.imshow(im1); plt.title("Original Form")
plt.subplot(122); plt.axis('off'); plt.imshow(im2); plt.title("Scanned Form")

# The images are RGB at this point, so convert with RGB2GRAY (not BGR2GRAY)
im1Gray = cv2.cvtColor(im1, cv2.COLOR_RGB2GRAY)
im2Gray = cv2.cvtColor(im2, cv2.COLOR_RGB2GRAY)

# Detect ORB features and compute descriptors.
MAX_NUM_FEATURES = 500
orb = cv2.ORB_create(MAX_NUM_FEATURES)
keypoints1, descriptors1 = orb.detectAndCompute(im1Gray, None)
keypoints2, descriptors2 = orb.detectAndCompute(im2Gray, None)

# Display keypoints
im1_display = cv2.drawKeypoints(im1, keypoints1, outImage=np.array([]), color=(255, 0, 0),
                                flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
im2_display = cv2.drawKeypoints(im2, keypoints2, outImage=np.array([]), color=(255, 0, 0),
                                flags=cv2.DRAW_MATCHES_FLAGS_DRAW_RICH_KEYPOINTS)
plt.figure(figsize=[20, 10])
plt.subplot(121); plt.axis('off'); plt.imshow(im1_display); plt.title("Original Form")
plt.subplot(122); plt.axis('off'); plt.imshow(im2_display); plt.title("Scanned Form")

# Brute-force matching with Hamming distance (suits ORB's binary descriptors)
matcher = cv2.DescriptorMatcher_create(cv2.DESCRIPTOR_MATCHER_BRUTEFORCE_HAMMING)
matches = matcher.match(descriptors1, descriptors2, None)

# Sort matches by score; newer OpenCV versions may return a tuple, so use sorted()
matches = sorted(matches, key=lambda x: x.distance)

# Remove not so good matches: keep the best 10%
numGoodMatches = int(len(matches) * 0.10)
matches = matches[:numGoodMatches]
if len(matches) < 4:
    raise RuntimeError("findHomography needs at least 4 matches")

# Draw top matches
im_matches = cv2.drawMatches(im1, keypoints1, im2, keypoints2, matches, None)
plt.figure(figsize=[40, 10])
plt.imshow(im_matches); plt.axis('off'); plt.title("Original Form")

# Extract location of good matches
points1 = np.zeros((len(matches), 2), dtype=np.float32)
points2 = np.zeros((len(matches), 2), dtype=np.float32)
for i, match in enumerate(matches):
    points1[i, :] = keypoints1[match.queryIdx].pt
    points2[i, :] = keypoints2[match.trainIdx].pt

# Find the homography mapping im2's points onto im1's points
h, mask = cv2.findHomography(points2, points1, cv2.RANSAC)

# Use homography to warp image
height, width, channels = im1.shape
im2_reg = cv2.warpPerspective(im2, h, (width, height))

# Display results
plt.figure(figsize=[20, 10])
plt.subplot(121); plt.imshow(im1); plt.axis('off'); plt.title("Original Form")
plt.subplot(122); plt.imshow(im2_reg); plt.axis('off'); plt.title("Scanned Form")
```
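The code above keeps a fixed 10% of matches (`numGoodMatches`). Depending on the image, that can discard good matches or keep bad ones. A common alternative is Lowe's ratio test, which keeps a match only when its best candidate is clearly closer than the second-best. In OpenCV that would be `matcher.knnMatch(descriptors1, descriptors2, k=2)` followed by comparing the two distances.

The NumPy sketch below reproduces the idea so it can be tried without OpenCV. The helper names are hypothetical. The 0.75 ratio is a conventional starting value and has not been tuned for coins.

```python
import numpy as np

def hamming_distances(desc1, desc2):
    """Pairwise Hamming distances between binary descriptors stored as uint8
    rows (ORB gives 32 bytes = 256 bits per keypoint)."""
    bits1 = np.unpackbits(desc1, axis=1).astype(np.int32)
    bits2 = np.unpackbits(desc2, axis=1).astype(np.int32)
    # differing bits = ones in a + ones in b - 2 * ones shared by a and b
    return bits1.sum(1)[:, None] + bits2.sum(1)[None, :] - 2 * bits1 @ bits2.T

def ratio_test_matches(desc1, desc2, ratio=0.75):
    """Hypothetical helper: Lowe's ratio test. Needs at least 2 rows in desc2.
    Returns a list of (query_idx, train_idx) pairs."""
    d = hamming_distances(desc1, desc2)
    order = np.argsort(d, axis=1)
    best, second = order[:, 0], order[:, 1]
    rows = np.arange(len(desc1))
    # Keep only matches whose best distance clearly beats the runner-up
    keep = d[rows, best] < ratio * d[rows, second]
    return [(int(i), int(best[i])) for i in rows[keep]]
```

The returned index pairs play the same role as `match.queryIdx` / `match.trainIdx` when filling `points1` and `points2`.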

Thanks.

Rob